RSA Conference Day 2: A bit of history

Our communities manager, Jon Ericson, is at the RSA Conference. Below are his reflections on the second day of the conference:

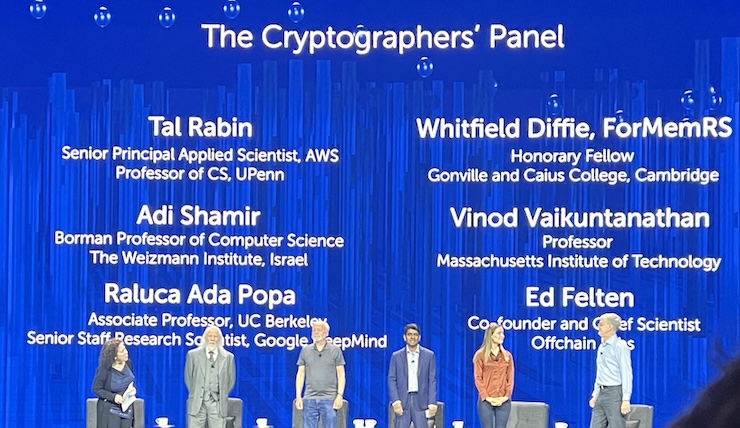

The Cryptographers’ Panel

According to people I talked with who have come to RSA many times, the highlight of the conference every year is The Cryptographers’ Panel, which includes luminaries in the field such as Whitfield Diffie, who pioneered public key cryptography, and Adi Shamir, who co-developed the RSA cryptosystem. This year the topics included cryptocurrency, blockchain technology, AI and post-quantum cryptography. What I found notable was the level of disagreement on all sorts of questions:

- Has cryptocurrency been a net positive so far?

- Will blockchain technology be foundational to technological advances in the future?

- Can AI models be secure?

- How immediate is the threat of quantum computers?

One thing the panel agreed on was that now is the time to use a hybrid model of encryption that includes both a classical algorithm and a post-quantum algorithm. As of OpenSSL 3.5, hybrid ML-KEM key exchange is the default in TLS v1.3. Whit Diffie pointed out that collecting messages in order to decrypt them later is central to the way intelligence agencies operate. He speculated that the NSA has data going back to the Great War (WWI), and we know from the Venona project that messages can be held for decades until they are eventually decrypted. There’s no limit to how long a determined party might work to decode valuable messages.
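To make the hybrid idea concrete, here is a minimal Python sketch of one common way to combine two shared secrets: concatenate them and run the result through a key derivation function, so the session key stays safe as long as either algorithm holds up. This illustrates the concept only and is not the actual TLS 1.3 key schedule or OpenSSL's implementation. The function names, the `info` label and the random stand-in secrets are assumptions made for the example; a real system would get the secrets from an actual classical key exchange and an actual post-quantum KEM.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with SHA-256 (extract-then-expand, following RFC 5869)."""
    # Extract: condense the input keying material into a pseudorandom key.
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    # Expand: stretch the pseudorandom key to the requested length.
    okm = b""
    block = b""
    counter = 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_secrets(classical_secret: bytes, post_quantum_secret: bytes) -> bytes:
    """Derive one session key from both secrets (hypothetical helper).

    Assumes both inputs have a fixed, known length, so plain concatenation
    is unambiguous. An attacker needs BOTH secrets to recompute the key.
    """
    return hkdf_sha256(
        ikm=classical_secret + post_quantum_secret,
        salt=b"",
        info=b"hybrid-sketch",  # hypothetical context label
    )


if __name__ == "__main__":
    # Stand-ins for the outputs of a classical exchange and a post-quantum KEM.
    classical = os.urandom(32)
    post_quantum = os.urandom(32)
    session_key = combine_secrets(classical, post_quantum)
    print(len(session_key), "byte session key derived")
```

Because the derived key depends on both inputs, someone recording traffic today would have to break the classical algorithm and the post-quantum algorithm to recover it later.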

Zero Trust at 15: The Evolution of Cybersecurity’s Defining Strategy

John Kindervag, Chief Evangelist at Illumio, and Jared Nussbaum, CISO at Ares Management, talked about their experience implementing the Zero Trust strategy. If you don’t know (and I didn’t until this week), Zero Trust means protecting data, services, applications and other assets by limiting access to only what is strictly necessary. Traditionally, networks were set up so that everything inside the firewall was trusted to be legitimate and traffic coming from outside the firewall was treated with suspicion. But that means an attacker needs only to break through that outer layer in order to have access to everything inside the trusted zone. A better design is to carefully map what connections are needed and only allow those.
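The "map the connections and only allow those" idea boils down to a default-deny allow-list. The Python sketch below shows the shape of it under simplifying assumptions: the service names, ports and the `is_allowed` helper are all hypothetical, and a real deployment would enforce this in network or identity infrastructure rather than in application code.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """One connection between two named services (hypothetical model)."""
    source: str
    destination: str
    port: int


# The explicit map of connections that are actually needed.
# Anything not listed here is denied, even if it comes from "inside".
ALLOWED_FLOWS = frozenset({
    Flow("web-frontend", "orders-api", 443),
    Flow("orders-api", "orders-db", 5432),
})


def is_allowed(flow: Flow, allowed: frozenset = ALLOWED_FLOWS) -> bool:
    """Default deny: permit a flow only if it is explicitly listed."""
    return flow in allowed


if __name__ == "__main__":
    # The frontend may call the API, but it may not reach the database directly.
    print(is_allowed(Flow("web-frontend", "orders-api", 443)))
    print(is_allowed(Flow("web-frontend", "orders-db", 5432)))
```

In this model, compromising the frontend does not grant a path to the database, because that connection was never on the map.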

Obviously doing this will be a major disruption, so the best option is to do one piece at a time, make sure it works and iterate. Trying to do everything at once is a recipe for failure since it’s too much too quickly. Something is bound to break, finding the problem will be difficult, and the only way to fix it is to roll back the work and (if there’s still a will to continue) try again.

The State of Venture Capital and Private Equity in Cybersecurity

Chenxi Wang from Rain Capital explained that her investing edge comes from having a technical background, domain knowledge and an ear for products people enjoy using. That’s how she found ProjectDiscovery, which just won the Innovation Sandbox contest. While this echoes Peter Lynch’s “invest in what you know”, the twist is that technology companies have ways of dazzling observers who only have a superficial understanding. Listening to people who actually use a product allows you to evaluate it accurately.

Building Secure AI: How Open Source, Standards and Communities Lead the Way

This talk focused on the new risks being introduced by AI and how open source, standards bodies and communities can address them. Arun Gupta, Chair of the Open Source Security Foundation (OpenSSF) Governing Board, pointed out that getting feedback from a wide variety of people improves the output of a project. Since it’s possible to make contributions that will fix your own problems, people are empowered to do just that. As I pointed out in my more-of-a-comment-than-a-question, it’s an example of the scientific method without so much of the credentialism.